Human + AI, better together.

Align early, grade smarter.

Validate spreadsheets. Share instant reports.

Assess for Learning

Highlights

- Pre‑launch calibration with synthetic submissions

- AI Copilot for flexible, auditable grading

- Excel formula validation alongside numeric checks

- One‑click Examiner’s Report across sessions

Pre-launch

Train the Grader Mode

Before the first script is marked, create alignment. Generate synthetic submissions for each grader, practice applying criteria, and review readiness with alignment reports.

- Create a bank of synthetic attempts that reflect your rubric

- Run calibration rounds; capture rationales and edge cases

- Sign off when variance is within tolerance
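As a rough illustration of the sign-off step above, the sketch below computes the variance of grader scores per rubric criterion and flags any criterion that falls outside a tolerance. The function names, the sample scores, and the tolerance of 1.0 are all hypothetical; the platform's actual calculation is not described here.

```python
from statistics import pvariance

def calibration_report(scores_by_criterion, tolerance):
    """Return per-criterion variance across graders and an alignment flag.

    scores_by_criterion maps a rubric criterion to the scores that each
    grader gave the same synthetic submission (illustrative structure).
    """
    report = {}
    for criterion, grader_scores in scores_by_criterion.items():
        variance = pvariance(grader_scores)  # population variance across graders
        report[criterion] = {
            "variance": variance,
            "aligned": variance <= tolerance,
        }
    return report

def ready_for_sign_off(report):
    """Graders are ready only when every criterion is inside the tolerance."""
    return all(entry["aligned"] for entry in report.values())

# Hypothetical calibration round: four graders, two criteria.
round_one = {
    "accuracy": [4, 4, 5, 4],  # graders largely agree
    "method": [2, 5, 3, 1],    # graders disagree; needs another round
}
report = calibration_report(round_one, tolerance=1.0)
print(report)
print("Ready for sign-off:", ready_for_sign_off(report))
```

In this made-up round, "accuracy" is inside the tolerance and "method" is not, so the team would run another calibration round on that criterion before signing off.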

What’s included

- Synthetic submission generator

- Calibration rounds & variance tracking

- Readiness & alignment report export

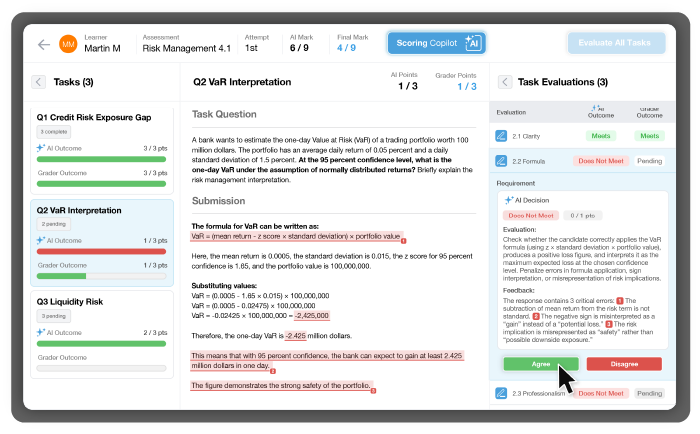

During grading

AI Copilot

Choose the flow that fits your governance. Let AI grade first for speed, then review; or grade internally first, then compare with AI. Mix and match outcomes and comments.

- Side‑by‑side comparison of human and AI outcomes

- Selective adoption of scores and feedback

- Audit trail with who/what/when for each decision

Controls

- Policy‑based thresholds for auto‑accept vs human review

- Role‑based permissions and lock‑step workflows

- Exportable evidence for QA and appeals
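One way to picture a policy-based threshold is as a small routing rule applied to each AI-graded item. The sketch below is an assumption for illustration only: the `Policy` fields, the idea of an AI confidence value, and the `route` function are hypothetical names, not the platform's API.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Policy:
    min_confidence: float  # AI confidence needed to auto-accept (hypothetical field)
    max_score_gap: float   # largest tolerated human/AI score gap (hypothetical field)

def route(ai_score: float, ai_confidence: float, policy: Policy,
          human_score: Optional[float] = None) -> str:
    """Return 'auto_accept' or 'human_review' for one AI-graded item."""
    # Low-confidence AI outcomes always go to a person.
    if ai_confidence < policy.min_confidence:
        return "human_review"
    # If a human already graded the item, a large disagreement also needs review.
    if human_score is not None and abs(human_score - ai_score) > policy.max_score_gap:
        return "human_review"
    return "auto_accept"

policy = Policy(min_confidence=0.9, max_score_gap=1.0)
print(route(ai_score=7.0, ai_confidence=0.95, policy=policy))                   # auto_accept
print(route(ai_score=7.0, ai_confidence=0.70, policy=policy))                   # human_review
print(route(ai_score=7.0, ai_confidence=0.95, policy=policy, human_score=4.0))  # human_review
```

Keeping the rule in a single function makes it easy to log the inputs and the decision together, which suits the who/what/when audit trail described earlier.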

Spreadsheet assessments

Formula Validation

Validate the Excel formulas behind each answer alongside the numeric result, so feedback can separate the method a candidate used from the outcome they reached.

- Rules > Actions toggle to enable checks

- Support for common Excel functions and ranges

- Feedback that differentiates method vs outcome
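To make the method-versus-outcome distinction concrete, here is a minimal sketch that checks one cell twice: once for whether it contains a formula calling the expected Excel function, and once for whether the number is right. The `check_cell` function, its parameters, and the sample cells are hypothetical; a real validator would need a proper formula parser rather than a regular expression.

```python
import math
import re

def check_cell(formula, value, expected_value, required_function, rel_tol=1e-6):
    """Grade one spreadsheet cell on method and outcome separately.

    formula is the cell's raw text (for example "=NPV(0.08,B2:B6)"),
    value is the number the cell displays.
    """
    # Method: the cell must hold a formula that calls the required function.
    is_formula = formula.startswith("=")
    pattern = r"\b" + re.escape(required_function) + r"\s*\("
    uses_function = is_formula and re.search(pattern, formula, re.IGNORECASE) is not None
    # Outcome: the displayed number must match the expected one within tolerance.
    value_ok = math.isclose(value, expected_value, rel_tol=rel_tol)
    return {"method": uses_function, "outcome": value_ok}

# Correct formula and correct number.
print(check_cell("=NPV(0.08,B2:B6)", 1234.56, 1234.56, "NPV"))
# Hard-coded number: right outcome, no evidence of method.
print(check_cell("1234.56", 1234.56, 1234.56, "NPV"))
# Right function, wrong number (for example a wrong range or rate).
print(check_cell("=NPV(0.10,B2:B5)", 998.10, 1234.56, "NPV"))
```

Returning the two results separately is what lets a grader give partial credit for a sound method with a slip in the inputs, or withhold it for a pasted-in number.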

Why it matters

- Credit methodology as well as final answers

- Reduce manual rework and back‑and‑forth

- Improve consistency across graders

Competency frameworks

Diagnostics Copilot

Connect grading outcomes directly to your competency framework. Model it once and the platform auto‑tags each new assessment so results roll up cleanly to competencies.

- Heat‑map each learner against domains and levels

- Highlight strengths and focus areas with targeted feedback

- Deliver personalized outcomes in the candidate’s report
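The roll-up from items to competencies can be sketched as a simple aggregation: each item carries one or more competency tags, and item scores are summed per tag. The data shapes, the domain names, and the `roll_up` function below are illustrative assumptions, not the platform's data model.

```python
from collections import defaultdict

def roll_up(item_results, item_tags):
    """Aggregate item scores into a whole-number percentage per competency domain.

    item_results maps item id -> (score, max_score).
    item_tags maps item id -> list of competency domains for that item.
    """
    earned = defaultdict(float)
    possible = defaultdict(float)
    for item_id, (score, max_score) in item_results.items():
        for domain in item_tags.get(item_id, []):
            earned[domain] += score
            possible[domain] += max_score
    # Skip domains with no available marks to avoid dividing by zero.
    return {d: round(100 * earned[d] / possible[d]) for d in possible if possible[d] > 0}

# Hypothetical framework tags and one learner's results.
tags = {
    "q1": ["Analysis"],
    "q2": ["Analysis", "Communication"],
    "q3": ["Communication"],
}
results = {"q1": (8, 10), "q2": (3, 5), "q3": (2, 10)}

profile = roll_up(results, tags)
print(profile)
```

A per-domain profile like this is the kind of input a heat-map needs: one row per learner, one column per domain, shaded by percentage.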

What’s included

- Framework modeler for domains, levels and descriptors

- Automatic tagging of items & outcomes across assessments

- Cohort and item heat‑maps with report integration

Post‑session

Examiner’s Report

Turn results into meaningful insight in minutes. Generate an overall performance summary, highlight strengths and weaknesses, and drill into every evaluation.

- One‑click cohort summary with visuals

- Exportable to PDF/CSV

- Share with educators and learners

What you get

- Summary trends & variance

- Item‑level analysis and exemplars

- Recommendations for improvement

Security & Integrations

- SSO (SAML/OIDC) and RBAC

- Data isolation and configurable retention

- EU AI Act, NCME, NCCA and GDPR ready

Deployment options

- Cloud with regional data residency

- Private tenant options

- API and Salesforce connector for custom workflows

Assess for Learning FAQs

Q. Does AI replace our graders?

A. No. AI Copilot augments graders and keeps educators in control. You decide the flow and acceptance policy.

Q. What about privacy?

A. We don’t train foundation models on your data. You control retention and residency; SSO & RBAC enforce access.

Q. Can we use this in high‑stakes contexts?

A. Yes. The platform is designed for credentialing associations and regulators with auditability and governance in mind.

Q. How do we get started?

A. Book a demo. We’ll map a pilot to your rubric and show an end‑to‑end flow, including the Examiner’s Report.

Enable your Digital Learning Workforce with 35+ Years of Proven Expertise

Unlock real ROI without the complexity. See how our tailored service packages can propel your organization forward, fast.